DISCLAIMER

I’m a doomer. In many cases, I see risks where there likely aren’t any. At the same time, I do not think Stable Diffusion or GPT or Llama or any of the tools on the market come remotely close to AGI, but I think there’s no technical reason why we can’t get there. I also think that once we reach AGI, we have every reason to believe that ASI is right around the corner. I’m not really worried about all of that though, because, to be honest, if we make it that far I will be pleasantly surprised.

A common refrain in the normie-adjacent discourse is “tell me how, exactly, AI can destroy humanity?” The ea/acc (whatever that means) crowd implicitly believes this is plausible, but preventable, and the doomer crowd thinks it’s all but inevitable. It’s a fair criticism, though, that no one seems to have an explanation for the mechanism of this danger, other than “something something manmade horrors beyond our comprehension.” To answer this question, I’m going to do something controversial, and move the goal posts. “Destroying humanity” is a ridiculous bar to set to declare a technology dangerous! Plus, I’m sure you will agree, there are plenty of scenarios between “AI becomes the salvation of Humanity” (also ridiculous) and “AI causes human extinction” that absolutely SUCK for humanity. So in that spirit, I present to you a simple, plausible scenario where current LLMs and next-token-generators can destroy modern human civilization.

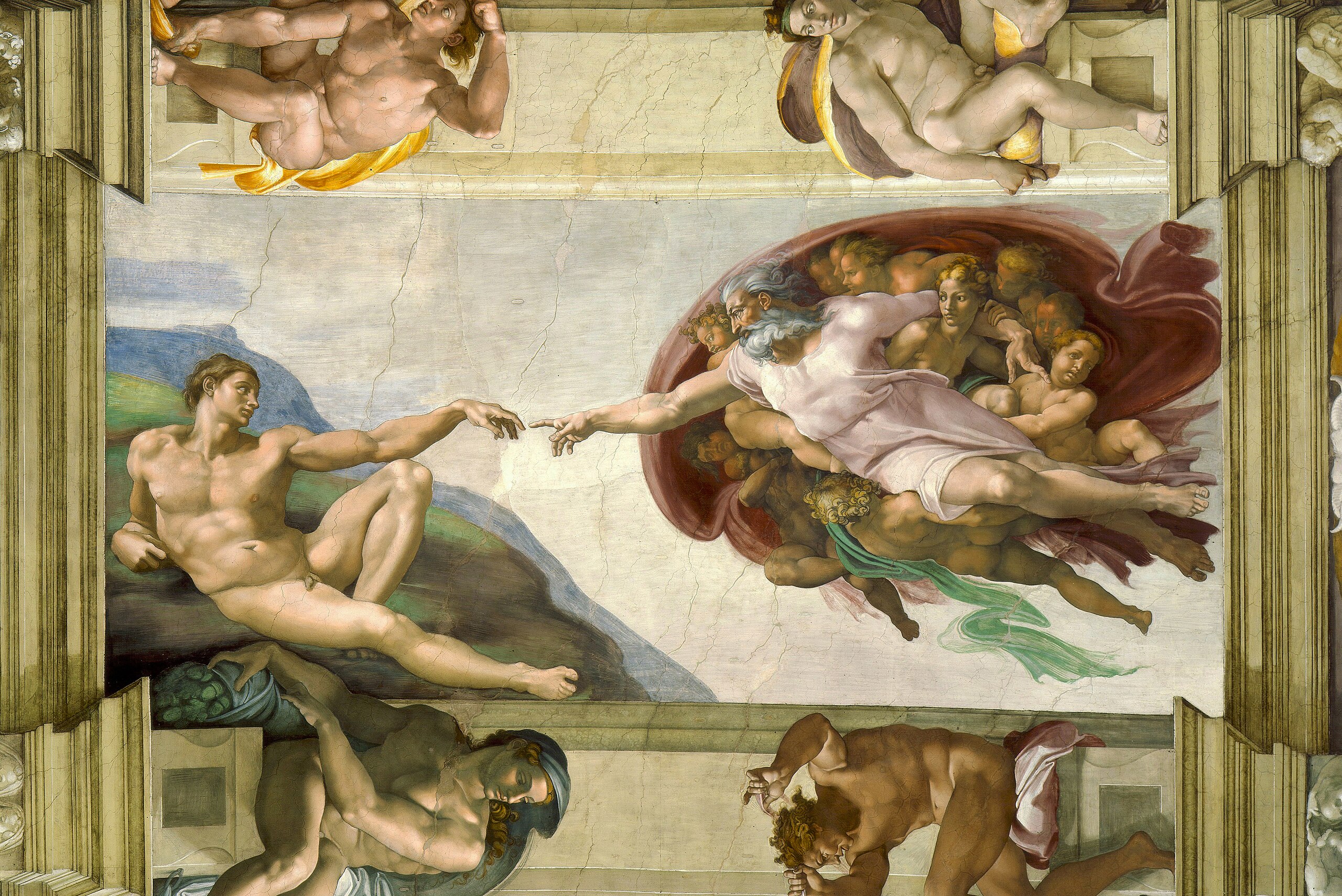

To be absolutely clear, if this scenario, or one like it, plays out, humanity will not go extinct, the Earth will not be consumed by an all-encompassing paperclip-maximizing fire, and in the long term, humanity may very well emerge from the crisis stronger than ever. That being said, billions would die, the current world order would be completely upended, and our recovery could take a thousand years. So it’s not an “existential risk” but it’s certainly a risk of “biblical” proportions. You might dismiss me now that I’ve outright stated I cannot imagine how Midjourney and ChatGPT wipe out humanity, but you might also consider that the collapse of civilization-as-we-know-it might seriously put a damper on your retirement plans.

So what is this doom? It’s simple: they take down the internet. More realistically, they simply ruin it and make it unusable. You may have heard the claim that a superintelligence cannot create super harms unless it is also superembodied. In other words, a single Boston Dynamics robot, no matter how intelligent, couldn’t take over the world, but a swarm of them easily might. I think that’s a very good and true argument. I also think that there’s a corollary to that: the more we virtualize our society, the more vulnerable we are to virtual actors. There’s no end to the damage that virtual actors could deal to our virtual infrastructure, with incalculable real-world consequences. Imagine, for a moment, if tomorrow all electronic payment methods disappeared. What would that do to the world economy? What would be the downstream impacts of that destabilization?

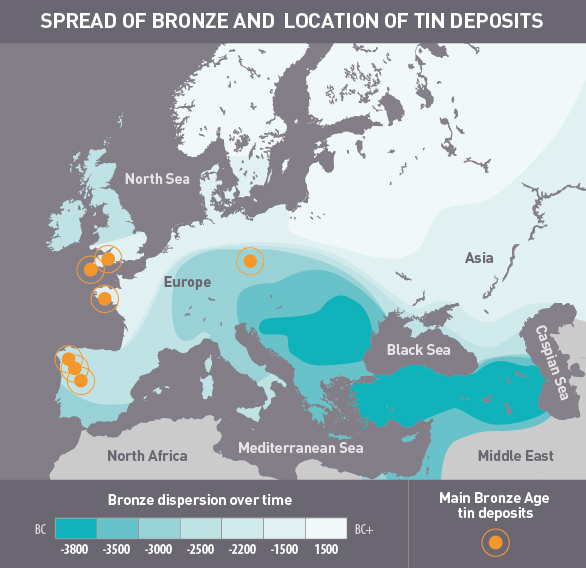

If you’re not familiar with it, I suggest reading up on the Bronze Age Collapse. The anxiety our ancestors felt during that time has reverberated for thousands of years in our collective consciousness, immortalized by ancient foundational texts. I bring it up because it is a simpler, easier-to-understand version of a disruption to vital infrastructure setting humanity back by a thousand years. You see, Bronze Age technology was very ea/acc for its time. You had better weapons, yes, but also tools to build things and farm. Yields are going up. Nations are popping out Wonders of the World left and right. Depending on who you ask, Atlantis was literally peaking around this time (and they had even BETTER technology than we do today!). A whole global supply chain was set up around this new Bronze trade.

For the uninitiated, Bronze is an alloy of copper and tin. As it turns out, copper was very plentiful around this time, but there were only a few tin mines in the whole world propping up this entire global industry. So long story short some stuff happened (I subscribe to the deluge & giants theory, but you’re free to believe the more academically palatable ones, such as climate change, sea peoples, and plague) and the tin production gets disrupted. No tin, so no bronze. And of course humanity had lived for hundreds of thousands of years without Bronze, so losing it was no biggie and they easily scaled back their lifestyles and moved on. No, I’m kidding. Like 30% of the global population died and almost every extant government either collapsed or completely lost all of their hegemony. It was very bad. Though humanity didn’t go extinct, plenty of cultures did.

Whatever theory you subscribe to, the general consensus is that something happened that disrupted the flow of tin, and chaos ensued naturally from that event. We’ve got our own Bronze Age going today that we call the “information era,” and our tin mines are factories in Taiwan that only like 3 people in the world fully understand. In many ways, our setup today is more fragile than the Bronze Age analog. Basically every productive enterprise on Earth is leveraging the internet in some way. We need it for diplomacy and governance, and at the individual level so much of our social lives has been completely virtualized. I bring this up because most of my argument is dedicated to how AI can bring ruin to the internet. It is my hope that you will see the clear link between losing the internet and the collapse of civilization-as-we-know-it. If I lose you there then nothing about what I am going to say will be compelling to you, but if you’re with me so far then let’s proceed.

How does AI ruin the internet?

I’m sure you’ve heard of the Dead Internet theory. If you haven’t, allow an AI to explain it to you:

The Dead Internet Theory posits that the majority of the internet is either generated or populated by artificial intelligence bots rather than real human users. Proponents believe that since the mid-2000s, real human content and interactions have been increasingly replaced by automated scripts or AI. This has allegedly been done to manipulate public opinion, boost engagement, or for other nefarious purposes. Critics, however, argue that while bots are indeed prevalent online, the internet remains dominantly human-driven. The theory remains a controversial and largely unproven conspiracy.

-Chat-GPT 4

I think this is pretty undeniable, even if ChatGPT says it’s just a conspiracy theory. If nothing else, the content on the old internet felt more “authentic” than it does today, and corporations sure do sponsor a lot of the content. This future I am about to posit is an extension of the Dead Internet. I call it the Undead Internet. To show you how the Internet becomes Undead, I’ll start with a narrow example and hopefully we can extrapolate it outwards: job seeking and hiring. Up until the early 2000s, the primary mode of job seeking was in print media. You would browse the classifieds or see a help wanted ad in a shop window. You’d have a typed-up resume you might send via the mail. If you were really avant-garde your resume might be printed or submitted by fax. A really forward-minded company might have had a phone interview, but for the most part it was all in person.

Fast forward to today, and the process is entirely virtual. You make a resume online. You fill out an online job application where you answer a bunch of questions and basically reformat your resume into these dumb required fields that ask you things that are traditionally answered by a resume. There might be a personality test where you have to pretend that wage slavery doesn’t upset you, and then ultimately some algorithm is going to parse through thousands of these applications and give you a score, before passing you off to a human at some point to actually talk to you. You might talk on the phone, but you’re probably talking on Zoom or something like that, especially for your first interview. The whole thing is online today; you basically can’t get a job any other way, and it’s infuriating.

So what does the avant-garde job seeker do today? Well, increasingly people recognize that their resumes have to serve the dual purpose of satisfying the algorithm gatekeeper and the human hiring manager. As a result, more and more people are turning to algorithms of their own to optimize their resumes. But of course it won’t stop there. Remember how annoying those applications are? How you have to undergo the ritual humiliation of copying content from a document they literally asked for into a form they require, all to prove that you can be a good little bureaucrat? Well, GPT-4 and Puppeteer can automate that easily. And as long as it’s automated, you might as well apply to every conceivable job that has some payment threshold regardless of your actual requirements. To be clear: you are actually incentivized to do this, and if you don’t do it, from a game theoretic standpoint, you will be a loser in the job market. We’re starting to see this already, and companies need to respond!

Out of those thousands of applications, maybe 5% are legit candidates for the given role. Nobody has time to go through all of them separating the wheat from the chaff, so the companies increasingly deploy ever more sophisticated AIs to do this. We’re seeing entire companies born for this very purpose. Within the next three years, mark my words, the first phone screening will no longer be with an HR person, but with an AI. I bet you anything.

Okay but I’m a job seeker and this is very annoying. I applied to hundreds of jobs; I don’t have time for hundreds of AI phone interviews, so I’ll use my own AI to ace those. People are already using AI transcription services to beat coding questions in interviews, how long before they just have the AI do the interview? The technology is reallllly close to supporting that already. At a certain point in this AI arms race, either the companies or the job seekers are going to realize, “wow, I’m going through a lot of effort just to identify real people I can talk to in this process, and that’s before I even begin the work of figuring out if anyone is the right fit for me.” At some point, they have to decide to take the whole process offline.

And so you can see how an AI arms race that all parties are incentivized to participate in can ruin the internet for a use-case it currently dominates completely. The AI doesn’t need to get sentient or get its own ideas to ruin online hiring, it just has to be available to people who need to get a job. No doubt some people might even create autonomous unsupervised programs that exist to constantly apply for jobs. It’s easy to keep them running, and their owners only need to interview for the ones they really find themselves interested in.

This is also readily applicable to dating, and, again, we can already see stuff like this happening here in the present day. Would you keep using an app to meet someone if you had thousands of matches but 99% of them were bots trying to sell you something? Of the 1% that are humans, 50% of them are using AI to spoof their appearances, or write their bios, such that you don’t truly know anything about these people until you actually meet them. What’s the utility of online dating in that world?
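To put rough numbers on that hypothetical, here is a back-of-the-envelope sketch in Python. The function name `genuine_matches` and the figure of 1,000 matches are my own illustrative assumptions; the 99% and 50% shares are the made-up ones from the paragraph above, not measured data.

```python
def genuine_matches(total_matches: int, bot_pct: int, ai_assisted_pct: int) -> int:
    """Estimate how many matches are real humans presenting themselves unaided.

    bot_pct: percentage of matches that are bots (hypothetical figure).
    ai_assisted_pct: percentage of the remaining humans who use AI to
    spoof their photos or bios (hypothetical figure).
    """
    # Integer arithmetic keeps the percentages exact.
    human_pct = 100 - bot_pct
    unassisted_pct = 100 - ai_assisted_pct
    return total_matches * human_pct * unassisted_pct // 10_000


# 1,000 matches, 99% bots, half of the humans using AI.
print(genuine_matches(1000, 99, 50))
```

Under those assumptions, a thousand matches contain ten actual humans, and only five of them are showing you an unfiltered version of themselves.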

Go to eBay. Imagine that 90% of the listings are fake and so are 95% of the bids. Armies of bots are creating fake markets online to artificially drive up prices by manufacturing the appearance of demand. People are incentivized to do this and already do it at a small scale, but AI will make it easy, scalable, and deployable to any cottage industry that does business online. These are just the things that are happening today with present technology. Who knows what eldritch horrors of fraud, waste, and abuse we will wake up to as the technology expands.

The internet may seem like it’s primarily a place of entertainment, and to a certain extent it seems like it doesn’t matter who manufactures our entertainment as long as it’s entertaining. I can see why artists are particularly nonplussed by these latest developments with generative AI when that’s the prevailing attitude. But if things keep going this way, you’ll soon see the real value of the internet: authentically connecting with real people. If things reach the point where you can hop on a video call with someone that you’ve been texting for months and you’re still not sure if you can trust that they’re actually human, well, that’s a problem.

How can I trust that my online banking representative is a real person, let alone a real representative of my bank? How do I know if this listing even actually exists? Don’t get me started on the news (which, again, we are already seeing in the present day). When the trust goes, the utility of the internet as a platform goes with it. The fake AI agents mass produce more content than us real human beings ever could, and the internet becomes overrun by the undead. Abandoned by humans, Albanian crypto bots try to hustle Russian sex bots who in turn try to get classified information from fake Tinder profiles that say they work at Raytheon. This is the Undead Internet.

The Undead Internet, according to ChatGPT, is abandoned and still inhabited by sad-looking bots desperately trying to scrape high quality data.

Maybe you’re not convinced that this sort of thing could cause people to actually abandon the internet en masse, and maybe you’re right. I would also be remiss if I didn’t point out that all of these are theoretically solvable problems, but trust once lost is not so easy to regain, and who knows if we will solve them in time? Without having a magically sentient AI, and without even getting into malicious actors (all of the use-cases I presented are self-enriching; don’t forget, some people are straight up terrorists who want the world to burn), I have presented a real scenario where this technology becomes radically destabilizing to our way of life. This is pretty much using a present-day level of technology at future levels of adoption. This is just one low-effort thought experiment with minimal imagination. If you start to add in gains in technological capabilities, the possibilities of humans and AIs co-creating powerful new demonic ideologies out of their crazy feedback loops of communication, and the consequences of those ideologies, things can get really dark really quickly.